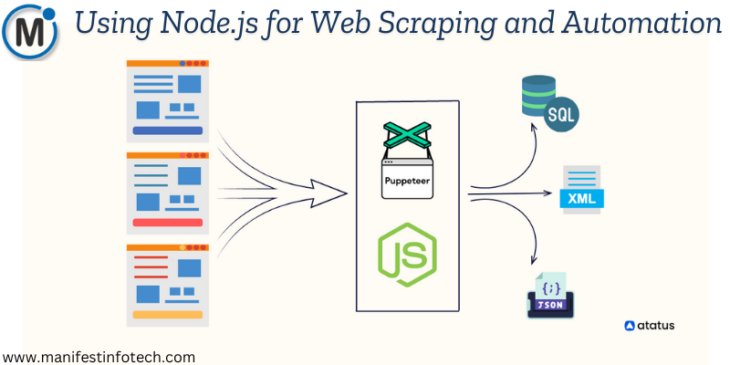

Web scraping and automation have become essential for gathering data, monitoring websites, and automating repetitive tasks. Node.js, with its non-blocking architecture and powerful libraries like Cheerio and Puppeteer, is an excellent choice for these tasks. In this guide, we will explore how to use Node.js for web scraping and automation, highlighting key libraries and providing code examples.

Why Use Node.js for Web Scraping?

Node.js is a great option for web scraping and automation due to the following benefits:

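Asynchronous Execution: The event loop keeps many HTTP requests in flight at once, so the scraper does not sit idle while waiting for each response.

Lightweight and Fast: Scripts start quickly and add little overhead, which suits frequent, short-running extraction and automation jobs.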

Rich Ecosystem: Provides powerful libraries like Cheerio, Puppeteer, and Axios for scraping and automation.

Cross-Platform Compatibility: Works across different operating systems.
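To make the asynchronous point concrete, here is a minimal sketch that starts several requests at once and waits for all of them. It uses no real network calls: fakeFetch is a hypothetical stand-in for an HTTP call such as axios.get(), and the URLs and the 100 ms delay are placeholders chosen for illustration.

```javascript
// Hypothetical stand-in for a real HTTP request: resolves after `ms` milliseconds.
const fakeFetch = (url, ms) =>
  new Promise((resolve) => setTimeout(() => resolve(`response from ${url}`), ms));

// Start every request immediately, then wait until all of them have finished.
const fetchAllConcurrently = async (urls, ms) => {
  const started = Date.now();
  const responses = await Promise.all(urls.map((url) => fakeFetch(url, ms)));
  return { responses, elapsedMs: Date.now() - started };
};

const urls = [
  "https://example.com/a",
  "https://example.com/b",
  "https://example.com/c",
];

fetchAllConcurrently(urls, 100).then(({ responses, elapsedMs }) => {
  console.log(responses);
  // The waits overlap, so the total is close to one delay rather than three.
  console.log(`Finished ${responses.length} requests in about ${elapsedMs} ms`);
});
```

With real requests the same pattern applies, although firing everything at once can overload a small site, so it is usually worth capping how many requests run in parallel.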

Setting Up the Environment

To begin, create a new Node.js project:

mkdir node-web-scraper && cd node-web-scraper

npm init -y

Installing Required Libraries

Install the necessary libraries for web scraping and automation:

npm install axios cheerio puppeteer

Axios: Makes HTTP requests to fetch web pages.

Cheerio: Parses and extracts data from HTML.

Puppeteer: Controls a headless browser for advanced automation.

Web Scraping with Cheerio

Cheerio is a lightweight library that parses HTML and allows us to extract data efficiently.

Example: Scraping Titles from a Website

    const axios = require("axios");
    const cheerio = require("cheerio");

    // Fetch a page and collect the text of every <h2> element.
    const scrapeWebsite = async () => {
      try {
        const { data } = await axios.get("https://example.com");
        const $ = cheerio.load(data); // parse the HTML into a queryable document
        const titles = [];

        $("h2").each((index, element) => {
          titles.push($(element).text().trim());
        });

        console.log("Scraped Titles:", titles);
      } catch (error) {
        console.error("Error scraping website:", error.message);
      }
    };

    scrapeWebsite();

Automating Tasks with Puppeteer

Puppeteer allows us to control a headless Chrome browser for automated interactions, such as filling forms or taking screenshots.

Example: Taking a Screenshot of a Website

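    const puppeteer = require("puppeteer");

    // Open a page in headless Chrome and save a screenshot of it.
    const captureScreenshot = async () => {
      const browser = await puppeteer.launch();
      try {
        const page = await browser.newPage();
        await page.goto("https://example.com", { waitUntil: "networkidle2" });
        await page.screenshot({ path: "screenshot.png" });
        console.log("Screenshot saved as screenshot.png");
      } finally {
        await browser.close(); // always release the browser, even on failure
      }
    };

    captureScreenshot().catch((error) => {
      console.error("Error capturing screenshot:", error.message);
    });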

Best Practices for Web Scraping

Respect Robots.txt: Always check the website’s robots.txt file before scraping.

Avoid Overloading Servers: Use rate limiting to prevent sending too many requests in a short time.

Use User Agents and Proxies: Prevent detection by websites that block scrapers.

Handle Errors Gracefully: Implement error handling to avoid crashes.
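Several of these practices can be sketched with nothing but the Node.js standard library. The helper names below (isPathAllowed, withRetry, fetchPolitely) and the default delays are assumptions made for illustration, not part of any library. The robots.txt check is deliberately simplified: it reads only Disallow rules in the "User-agent: *" group and ignores Allow rules, wildcards, and per-bot groups.

```javascript
// Simplified robots.txt check: true if no Disallow rule in the "User-agent: *"
// group is a prefix of the given path.
const isPathAllowed = (robotsTxt, path) => {
  let inWildcardGroup = false;
  const disallowed = [];
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split("#")[0].trim(); // drop comments
    const sep = line.indexOf(":");
    if (sep === -1) continue;
    const field = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();
    if (field === "user-agent") inWildcardGroup = value === "*";
    else if (field === "disallow" && inWildcardGroup && value) disallowed.push(value);
  }
  return !disallowed.some((prefix) => path.startsWith(prefix));
};

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run task(); on failure wait baseDelayMs, then 2x, 4x, ... before trying again.
const withRetry = async (task, retries, baseDelayMs) => {
  for (let attempt = 0; ; attempt += 1) {
    try {
      return await task();
    } catch (error) {
      if (attempt >= retries) throw error; // out of retries: surface the error
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
};

// Fetch URLs one at a time, pausing between requests and retrying failures.
// fetchPage is supplied by the caller, for example (url) => axios.get(url).
const fetchPolitely = async (urls, fetchPage, options = {}) => {
  const { delayMs = 1000, retries = 3, baseDelayMs = 500 } = options;
  const results = [];
  for (const [index, url] of urls.entries()) {
    if (index > 0) await sleep(delayMs); // rate limit: pause between requests
    results.push(await withRetry(() => fetchPage(url), retries, baseDelayMs));
  }
  return results;
};

const sampleRobots = "User-agent: *\nDisallow: /private/\n";
console.log(isPathAllowed(sampleRobots, "/private/data")); // false
console.log(isPathAllowed(sampleRobots, "/blog")); // true
```

Because fetchPage is passed in, the same loop works with Axios, the built-in fetch, or a Puppeteer page, and it is also where a custom User-Agent header could be set. For production use, a dedicated robots.txt parser is a safer choice than this prefix check.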

Conclusion

Node.js provides powerful tools for web scraping and automation, with libraries like Cheerio for HTML parsing and Puppeteer for browser automation. Whether you need to extract data or automate repetitive tasks, these tools offer efficient and scalable solutions. Start experimenting and unlock the full potential of Node.js for web scraping and automation!

